11. TensorFlow Softmax

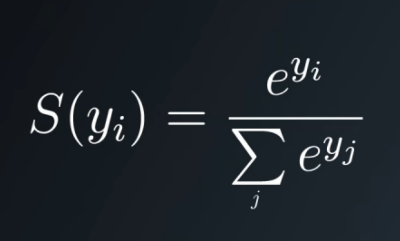

Softmax Function

TensorFlow Softmax

In the previous lesson you built a softmax function from scratch. Now let's see how softmax is done in TensorFlow.

    x = tf.nn.softmax([2.0, 1.0, 0.2])

Easy as that! tf.nn.softmax() implements the softmax function for you. It takes in logits and returns softmax activations.
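To check what tf.nn.softmax() should return for these logits, here is a minimal pure-Python sketch of the same computation. The `softmax` helper below is a hypothetical stand-in written for illustration, not TensorFlow's implementation, and it assumes a flat list of logits.

```python
import math

def softmax(logits):
    """Reference softmax for a flat list of logits (illustrative helper)."""
    # Subtracting the largest logit leaves the result unchanged
    # but keeps exp() from overflowing on large inputs.
    shift = max(logits)
    exps = [math.exp(v - shift) for v in logits]
    total = sum(exps)
    # Each activation is its exponential divided by the sum of all of them.
    return [e / total for e in exps]

logits = [2.0, 1.0, 0.2]
probs = softmax(logits)
print([round(p, 3) for p in probs])  # [0.652, 0.24, 0.108]
```

The activations sum to 1 and keep the ordering of the logits. In TensorFlow 2.x with eager execution, tf.nn.softmax([2.0, 1.0, 0.2]).numpy() should give the same values up to float32 rounding; in TensorFlow 1.x the returned tensor has to be evaluated inside a tf.Session before the numbers are visible.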

Quiz

Use the softmax function in the quiz below to return the softmax of the logits.

Quiz

Answer the following 2 questions about softmax.